Introduction

Identity in distributed applications is hard. It’s a complex matter, and implementation varies depending on what you are building. In particular, once your application moves away from a monolith to microservices or serverless, the authentication and authorization plane that once served as middleware in the monolith has to adapt, and a number of different patterns have emerged to fill that role.

In this article, we’ll cover some of the most common ones in use. Note that these patterns are agnostic to the authentication/authorization mechanism used, so we won’t touch on those in this post.

The API Gateway/edge authentication pattern

What it is

At a high level, the API Gateway/edge serves as the termination point for token inspection, enrichment, and payload encryption/decryption. In this scenario, the edge authentication service is responsible for inspecting the tokens provided by the client and running one or more verification and modification passes on them before the request reaches the end service.

There are many derived architectures from this base pattern, and some of the more common ones are noted below (some of these can be combined):

- The token used by the client is translated to an internal “secure” token, such that an attacker has fewer chances to craft a valid internal token. For instance, the internal token might be signed with a key pair that is not exposed to the public.

- The edge authentication service reaches out to an authorization server that enriches the token with scopes/roles used to enforce authorization decisions at the microservice level.

- The token propagated internally is a “passport,” which is a way to propagate the client identity information in a uniform way, especially in environments with multiple IdPs or token types.

A further variation of this is the Backend for Frontend (BFF) pattern. From an AuthN/AuthZ perspective, the BFF pattern doesn’t significantly change the logic but adds further complexity in maintaining state and logic across different gateways.
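The token translation and enrichment variants described above can be sketched roughly as follows. This is a minimal illustration, not a production design: the keys, the `roles_for` lookup, and the 60-second internal lifetime are assumptions, and HMAC stands in for the key pair mentioned above because the Python standard library has no asymmetric signing.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical keys for illustration only. HMAC is a stand-in for the
# private/public key pair an actual deployment would likely use.
EXTERNAL_KEY = b"external-demo-key"
INTERNAL_KEY = b"internal-demo-key"


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign(claims: dict, key: bytes) -> str:
    """Serialize the claims and append an HMAC-SHA256 signature."""
    body = _b64(json.dumps(claims, sort_keys=True).encode())
    sig = _b64(hmac.new(key, body.encode(), hashlib.sha256).digest())
    return f"{body}.{sig}"


def verify(token: str, key: bytes, now: Optional[float] = None) -> dict:
    """Return the claims if signature and expiry check out, else raise."""
    body, _, sig = token.partition(".")
    expected = _b64(hmac.new(key, body.encode(), hashlib.sha256).digest())
    if not hmac.compare_digest(sig, expected):
        raise ValueError("bad signature")
    claims = json.loads(_unb64(body))
    if claims["exp"] <= (time.time() if now is None else now):
        raise ValueError("token expired")
    return claims


def translate_at_edge(external_token: str, roles_for: dict) -> str:
    """Verify the client token, enrich it, and mint an internal token."""
    claims = verify(external_token, EXTERNAL_KEY)
    internal = {
        "sub": claims["sub"],
        "roles": roles_for.get(claims["sub"], []),  # enrichment step
        "exp": time.time() + 60,  # assumed short internal lifetime
    }
    return sign(internal, INTERNAL_KEY)
```

Internal services only ever verify against the internal key, so a token that is valid at the edge is not automatically valid inside the perimeter.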

Benefits

The edge authentication pattern is advantageous in scenarios where there are multiple teams utilizing different programming languages and where there is a desire for high performance and a clear separation of responsibilities between infrastructure and application developers.

This pattern is adopted by several companies, including Netflix.

Drawbacks

This model poses two primary drawbacks:

- Lower Security Guarantees: An attacker that manages to compromise an internal service can move laterally to the rest of the system unchecked, especially in modern environments where the perimeter is at L7 instead of L4.

- Stale Authorization Decisions: This pattern also falters in scenarios where authorization decisions need immediate enforcement, as tokens carrying authorization scopes could become stale.
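To make the staleness drawback concrete, here is a small sketch under stated assumptions: the `Token` type, the `current_scopes` store, and the 600-second lifetime are all hypothetical. It contrasts a decision made from scopes frozen into a token with one made against the authoritative store at request time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    subject: str
    scopes: frozenset
    expires_at: float


# Hypothetical authoritative store, updated when an admin revokes access.
current_scopes = {"alice": {"orders:read", "orders:write"}}


def mint(subject: str, now: float, ttl: float = 600.0) -> Token:
    """Snapshot the subject's scopes into a token at the edge."""
    return Token(subject, frozenset(current_scopes[subject]), now + ttl)


def allowed_by_token(token: Token, scope: str, now: float) -> bool:
    """Decision a downstream service makes from the token alone."""
    return now < token.expires_at and scope in token.scopes


def allowed_by_lookup(subject: str, scope: str) -> bool:
    """Decision made against the authoritative store at request time."""
    return scope in current_scopes.get(subject, set())


token = mint("alice", now=0.0)
current_scopes["alice"].discard("orders:write")  # access revoked after minting

# The token still grants the revoked scope until it expires.
stale = allowed_by_token(token, "orders:write", now=5.0)
fresh = allowed_by_lookup("alice", "orders:write")
after_expiry = allowed_by_token(token, "orders:write", now=601.0)
```

Shorter token lifetimes narrow the window but do not close it, which is why this pattern struggles when revocation has to take effect immediately.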

The middleware pattern

What it is

In this pattern, all the AuthN/AuthZ logic is enforced at the service level via middleware. Here, the authorization logic is housed in the middleware, while the authorization data is usually accessed directly from a database.

The middleware pattern used to be popular as it facilitated the simplest one-to-one migration from a monolith; however, it is becoming increasingly less common today.
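As a rough sketch of what this looks like in a single service, the WSGI middleware below authenticates and authorizes each request before the application code runs. The `SESSIONS` and `PERMISSIONS` tables are hypothetical in-memory stand-ins for the token store and the authorization data that would normally live in a database.

```python
# Hypothetical stand-ins for a token store and an authorization table.
SESSIONS = {"token-alice": "alice", "token-bob": "bob"}
PERMISSIONS = {("alice", "/reports"), ("bob", "/profile")}


class AuthMiddleware:
    """WSGI middleware that enforces AuthN/AuthZ before the app runs."""

    def __init__(self, app):
        self.app = app

    def __call__(self, environ, start_response):
        header = environ.get("HTTP_AUTHORIZATION", "")
        user = SESSIONS.get(header.removeprefix("Bearer "))
        if user is None:
            start_response("401 Unauthorized", [("Content-Type", "text/plain")])
            return [b"unauthenticated"]
        if (user, environ.get("PATH_INFO", "")) not in PERMISSIONS:
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [b"forbidden"]
        environ["auth.user"] = user  # hand the identity to the application
        return self.app(environ, start_response)


def reports_app(environ, start_response):
    """Application code stays free of AuthN/AuthZ checks."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [f"reports for {environ['auth.user']}".encode()]


def call(app, path, token=None):
    """Small helper that invokes a WSGI app and returns (status, body)."""
    seen = {}
    environ = {"PATH_INFO": path}
    if token:
        environ["HTTP_AUTHORIZATION"] = f"Bearer {token}"
    body = app(environ, lambda status, headers: seen.update(status=status))
    return seen["status"], b"".join(body)


service = AuthMiddleware(reports_app)
```

Every service written in a different language or framework needs its own equivalent of `AuthMiddleware`, which is where the divergence discussed in the drawbacks comes from.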

Benefits

The primary benefit of this pattern is that it often represents a 1:1 migration from the monolith. A secondary benefit is that, since AuthN/AuthZ is close to the application logic, compromising a service doesn’t necessarily lead to a breach of the rest of the deployment.

Drawbacks

This model presents several drawbacks:

- Divergent Implementations: As the environment’s complexity increases, there can be a proliferation of middleware with potentially different behaviors, leading to correctness issues and potential security concerns.

- Complex Migrations: Any alterations to the structure of the tokens or the AuthN/AuthZ logic necessitate modifications to each middleware implementation.

- Lack of Governance/Inspectability: Embedding AuthN/AuthZ logic in a DB/middleware renders readability and inspectability unfeasible in organizations where security or legal teams need to inspect such logic.

The embedded pattern

What it is

Similar to the middleware pattern, in this pattern, all authentication and authorization are embedded directly in the application code.

This pattern is a less clean yet even easier way to perform one-to-one migration from a monolith; however, it is strictly inferior to the other approaches due to potential security concerns, particularly when dealing with authentication.

Benefits

The primary benefit of this pattern is that it often represents a 1:1 migration from the monolith. A secondary benefit is that the authorization logic can be embedded in the database tables, increasing performance.

Drawbacks

This model is probably the least viable one in real world environments for several reasons:

- Divergent Implementations: As the environment’s complexity increases, there can be a proliferation of embedded implementations with potentially different behaviors, leading to correctness issues and potential security concerns.

- Complex Migrations: Any alterations to the structure of the tokens or the AuthN/AuthZ logic necessitate modifications to every service that embeds that logic.

- Lack of Governance/Inspectability: Embedding AuthN/AuthZ logic in the DB or application code renders readability and inspectability unfeasible in organizations where security or legal teams need to inspect such logic.

- Higher risk of vulnerabilities: Co-mingling application logic with identity logic leads to a significantly higher risk of security vulnerabilities.

The sidecar pattern

What it is

A sidecar is a recognized pattern where functionalities of an application are segregated into separate processes to provide isolation and encapsulation. A critical point to note here is that the sidecar and the application coexist in an encapsulated, trusted environment. Thus, all communications between an application and a sidecar are trusted.

This arrangement facilitates the offloading of authorization and authentication to the sidecar, allowing the incoming connection to pass through the application only after successful authorization and authentication.

This pattern is gaining popularity, with companies ranging from Block to JP Morgan adopting this architecture.

Envoy has recently released an extension to make the adoption of the sidecar pattern significantly easier in a service mesh.
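The request flow through a sidecar can be modeled in a few lines. This is only a conceptual sketch: a real sidecar is a separate process (for example a proxy in the same pod), and the `TOKENS` and `POLICY` tables, the `x-user` header, and the function names are assumptions made for illustration.

```python
from typing import Callable, Dict, Optional, Tuple

Request = Dict[str, str]
Response = Tuple[int, str]

# Hypothetical credentials and policy, for illustration only.
TOKENS = {"token-alice": "alice", "token-bob": "bob"}
POLICY = {"alice": {"/orders", "/profile"}, "bob": {"/profile"}}


def authenticate(token: str) -> Optional[str]:
    """Map a presented token to a user, or None if it is unknown."""
    return TOKENS.get(token)


def authorize(user: str, path: str) -> bool:
    """Check the user against the per-path policy."""
    return path in POLICY.get(user, set())


def application(request: Request) -> Response:
    """The business service; it trusts whatever the sidecar lets through."""
    return 200, f"{request['path']} served for {request['x-user']}"


class AuthSidecar:
    """Stands in for a proxy process co-located with the application."""

    def __init__(self, upstream: Callable[[Request], Response]):
        self.upstream = upstream

    def handle(self, request: Request) -> Response:
        user = authenticate(request.get("authorization", ""))
        if user is None:
            return 401, "unauthenticated"
        if not authorize(user, request.get("path", "/")):
            return 403, "forbidden"
        # Forward over the trusted local hop with the verified identity.
        forwarded = {k: v for k, v in request.items() if k != "authorization"}
        forwarded["x-user"] = user
        return self.upstream(forwarded)


sidecar = AuthSidecar(application)
```

The application never sees an unauthenticated request, and the same sidecar can front services written in any language.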

Benefits

This model offers several benefits:

- Zero-Trust Approach: Each service enforces its authentication and authorization logic.

- Uniform Implementation: A singular implementation is applied to every service, preventing diverging implementations.

- Easier Migrations and Governance: Changes to logic or data structure can be centralized in a single place.

- No Stale Identity data: Authorization decisions are executed at the sidecar level, reducing concerns about staleness.

- Separation of concerns: The application development team doesn’t have to worry about the AuthN/AuthZ fabric of the system.

Drawbacks

The sidecar approach has two notable drawbacks:

- Management overhead: In this pattern, there are more containers to deploy and monitor.

- Latency: Checking signatures/policies at every microservice introduces increased latency compared to “check once”.

Implement the API server and sidecar patterns with Gate

SlashID Gate supports several deployment modes and can be deployed in conjunction with all API gateways and service meshes.

We’ll use Kubernetes clusters in these examples, but we also support out-of-the-box integrations with all major cloud vendors’ API gateways to support managed infrastructure.

Caching

As we discussed earlier in the article, one of the key considerations when deciding on the architecture is latency. Gate has a built-in caching plugin that allows us to pick and choose which URLs and plugins are allowed to cache responses and also allows us to manually override the Cache-Control policy for some URLs.

The cache is an LRU cache whose size is configurable, with a default size of 32 MB.

As an example:

    plugins_http_cache:
      - pattern: '*'
        cache_control_override: private, max-age=600, stale-while-revalidate=300

You can find the full configuration here.
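For readers unfamiliar with size-bounded LRU caches, the following sketch shows the general eviction behavior. It is a generic illustration and not Gate's implementation, whose internals are not described here; the class name and the choice to count only value bytes are assumptions.

```python
from collections import OrderedDict
from typing import Optional


class ByteBoundedLRU:
    """LRU cache that evicts least recently used entries past a byte budget."""

    def __init__(self, max_bytes: int = 32 * 1024 * 1024):
        self.max_bytes = max_bytes
        self.used = 0
        self._items: "OrderedDict[str, bytes]" = OrderedDict()

    def get(self, key: str) -> Optional[bytes]:
        value = self._items.get(key)
        if value is not None:
            self._items.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key: str, value: bytes) -> None:
        if len(value) > self.max_bytes:
            return  # a single entry larger than the budget is never cached
        if key in self._items:
            self.used -= len(self._items.pop(key))
        self._items[key] = value
        self.used += len(value)
        while self.used > self.max_bytes:
            _, evicted = self._items.popitem(last=False)  # oldest first
            self.used -= len(evicted)
```

With a 10-byte budget, inserting two 5-byte responses, reading the first, and then inserting a 3-byte response evicts the second entry, since it is the least recently used.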

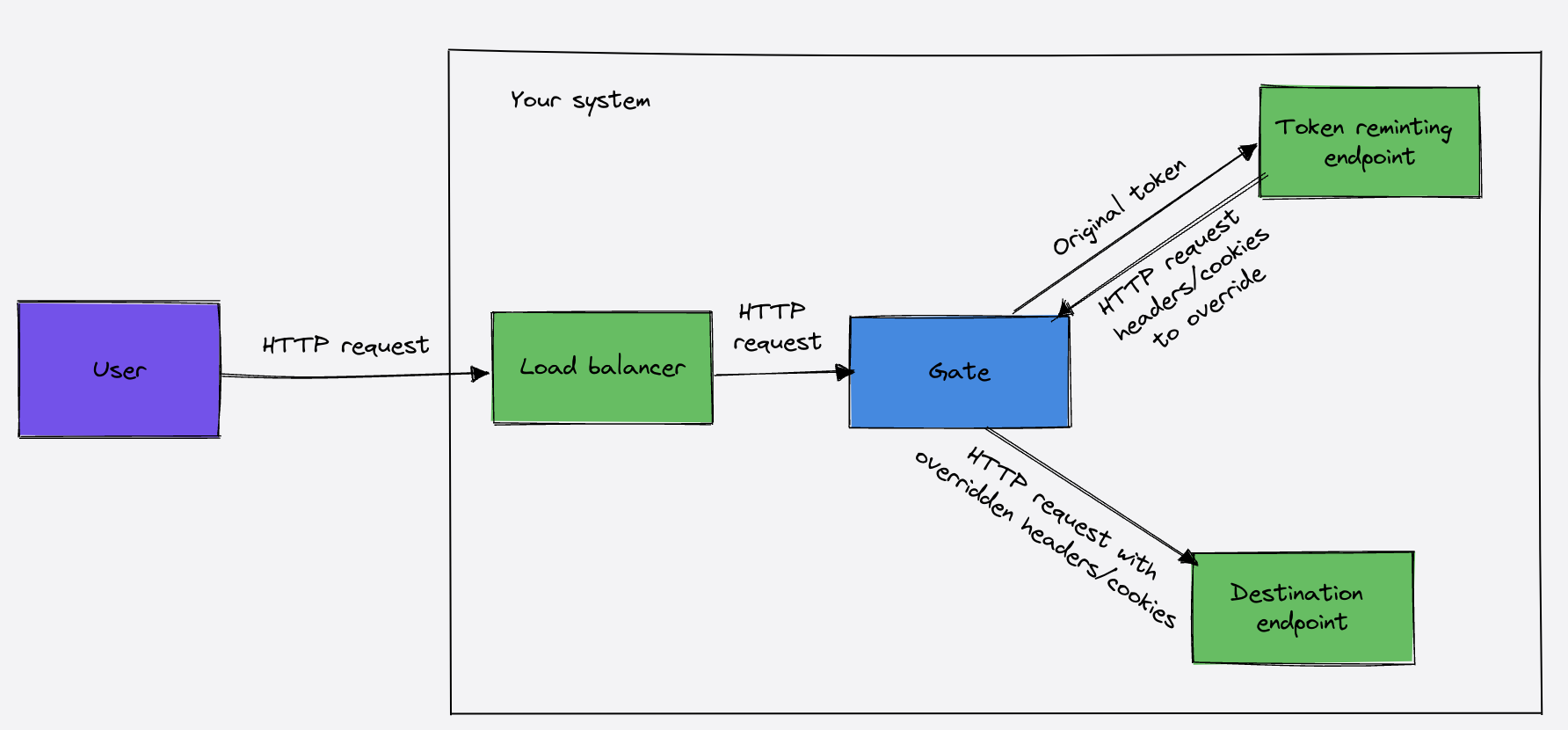

Passporting tokens

We’ve shown earlier that passporting, that is, propagating an internal token in place of the external one, is a common practice used by multiple companies. Gate supports the ability to remint tokens and to add custom claims to existing tokens.

When a request comes in, Gate sends a POST request to the reminter webhook with a body in the following format:

    {
      "token": "Token from the original request"
    }

A valid response looks as follows:

    {
      "headers_to_set": {
        "Authorization": "Basic YWxhZGRpbjpvcGVuc2VzYW1l",
        "IsTranslated": "true"
      },
      "cookies_to_add": {
        "X-Internal-Auth": "9xUromfTraIwHpmC6R9NDwJwItE"
      }
    }

By default, Gate will strip the original token from the request before forwarding. You can instruct Gate to keep the original token by setting the keep_old_token option to true. Gate will override the original request headers with the headers returned from headers_to_set, and will add all cookies from cookies_to_add to the ones already present in the request.
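The exchange above can be sketched from both sides. The first function is a hypothetical reminter webhook handler; the second models how the response is applied to the forwarded request, following the behavior described above. The `INTERNAL_TOKENS` table, the `X-Internal-Token` header, and the assumption that the original token travels in the `Authorization` header are illustrative choices, not part of Gate's documented interface.

```python
# Hypothetical mapping from external tokens to internal credentials.
INTERNAL_TOKENS = {"external-abc": "internal-xyz"}


def remint_webhook(payload: dict) -> dict:
    """Handle the POST body sent to the reminter and build the response."""
    internal = INTERNAL_TOKENS[payload["token"]]
    return {
        "headers_to_set": {"X-Internal-Token": internal, "IsTranslated": "true"},
        "cookies_to_add": {"X-Internal-Auth": internal},
    }


def apply_remint(request: dict, response: dict,
                 keep_old_token: bool = False,
                 token_header: str = "Authorization") -> dict:
    """Model of how the reminter response reshapes the forwarded request."""
    headers = dict(request["headers"])
    cookies = dict(request["cookies"])
    if not keep_old_token:
        headers.pop(token_header, None)  # original token stripped by default
    headers.update(response.get("headers_to_set", {}))  # overrides existing
    cookies.update(response.get("cookies_to_add", {}))  # added to existing
    return {"headers": headers, "cookies": cookies}


incoming = {
    "headers": {"Authorization": "external-abc", "Accept": "application/json"},
    "cookies": {"session": "s1"},
}
reply = remint_webhook({"token": "external-abc"})
forwarded = apply_remint(incoming, reply)
kept = apply_remint(incoming, reply, keep_old_token=True)
```

In `forwarded` the external token is gone and the backend only sees the internal credentials, while `kept` carries both.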

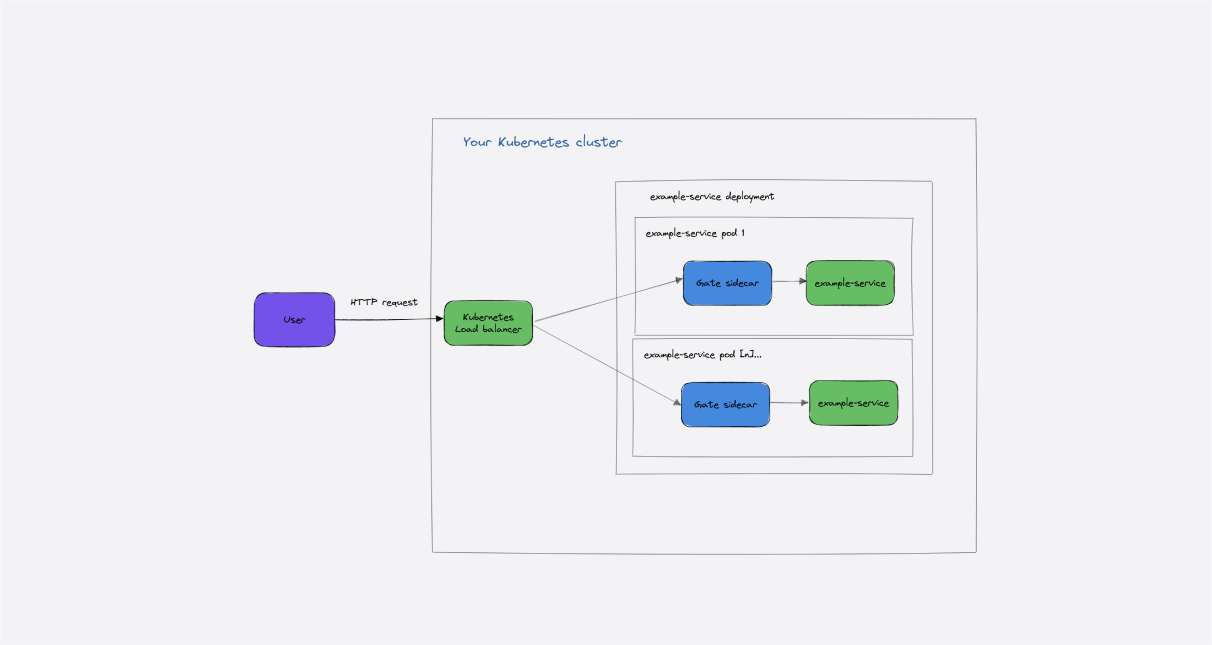

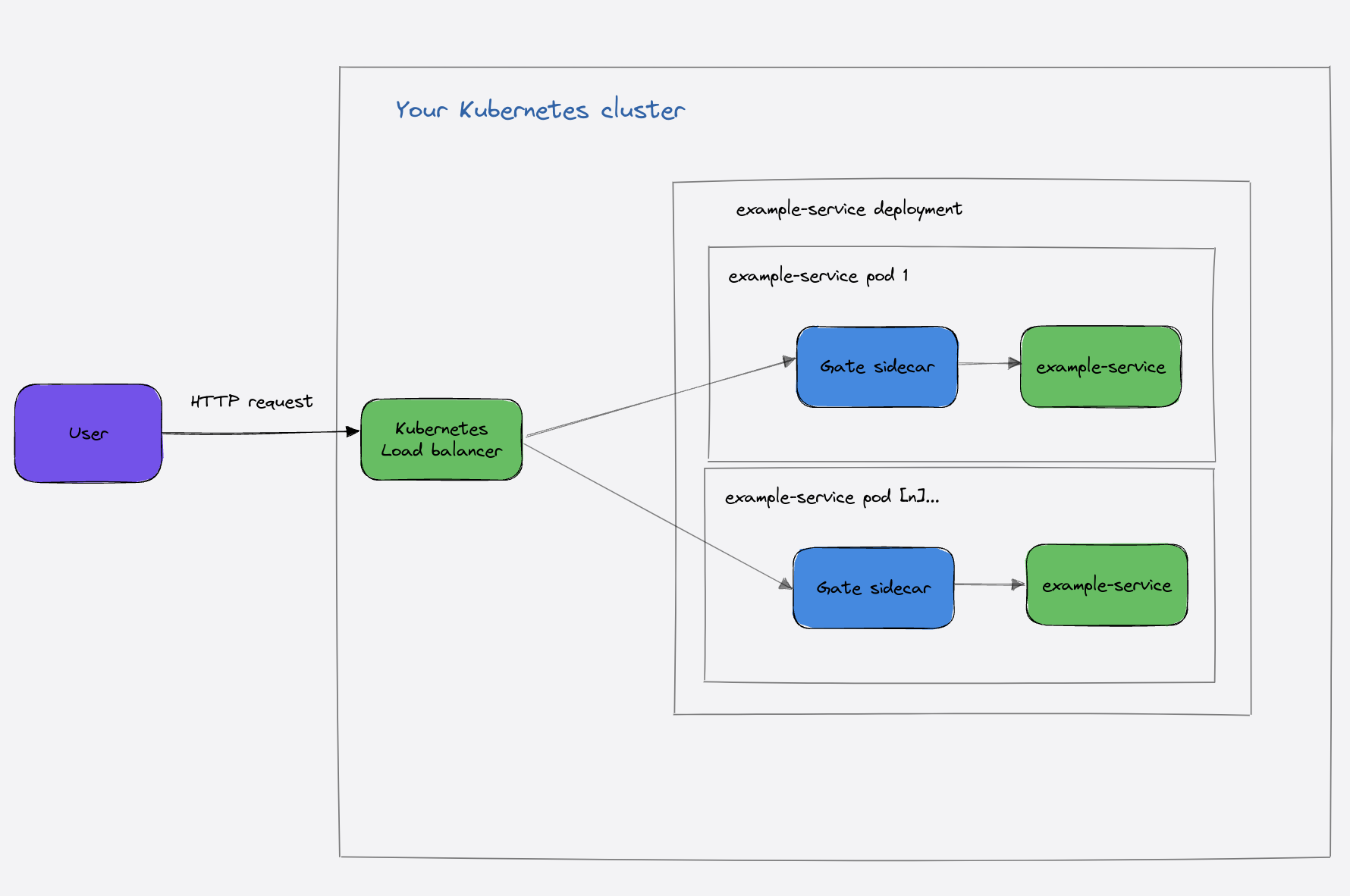

The sidecar pattern

In this example, we’ll deploy Gate as an Envoy External Authorization gRPC filter. To simplify the deployment, we’ll use Emissary-ingress, but a similar configuration works for Envoy.

You can see a full deployment guide here.

At a high level, there are three key operations to the deployment:

- Deploy Gate as a service

- Deploy a Filter and FilterPolicy

- Deploy an AuthService for Emissary

Conceptually, the deployment looks as follows:

Having done that, you can configure Gate as you normally would, but specifying the mode option in the deployment:

    mode: ext_auth
    default:
      ext_auth_response: deny
    port: 5000
    tls:
      enabled: false

From here on, routes and plugins are defined as usual:

- id: authn_proxy

type: authentication-proxy

enabled: false

parameters:

<<: *slashid_config

login_page_factors:

- method: email_link

jwks_url: https://api.slashid.com/.well-known/jwks.json

jwks_refresh_interval: 15m

jwt_expected_issuer: https://api.slashid.com

online_tokens_validation_timeout: "24h"

- id: authz_vip

type: opa

enabled: false

parameters:

<<: *slashid_config

cookie_with_token: SID-AuthnProxy-Token

policy_decision_path: /authz/allow

policy: |

package authz

import future.keywords.if

default allow := false

allow if input.request.parsed_token.payload.groups[_] == "vips"

...

- pattern: "*/profile/vip"

plugins:

authn_proxy:

enabled: true

authz_vip:

enabled: true

- pattern: "*/admin"

plugins:

authn_proxy:

enabled: true

authz_admin:

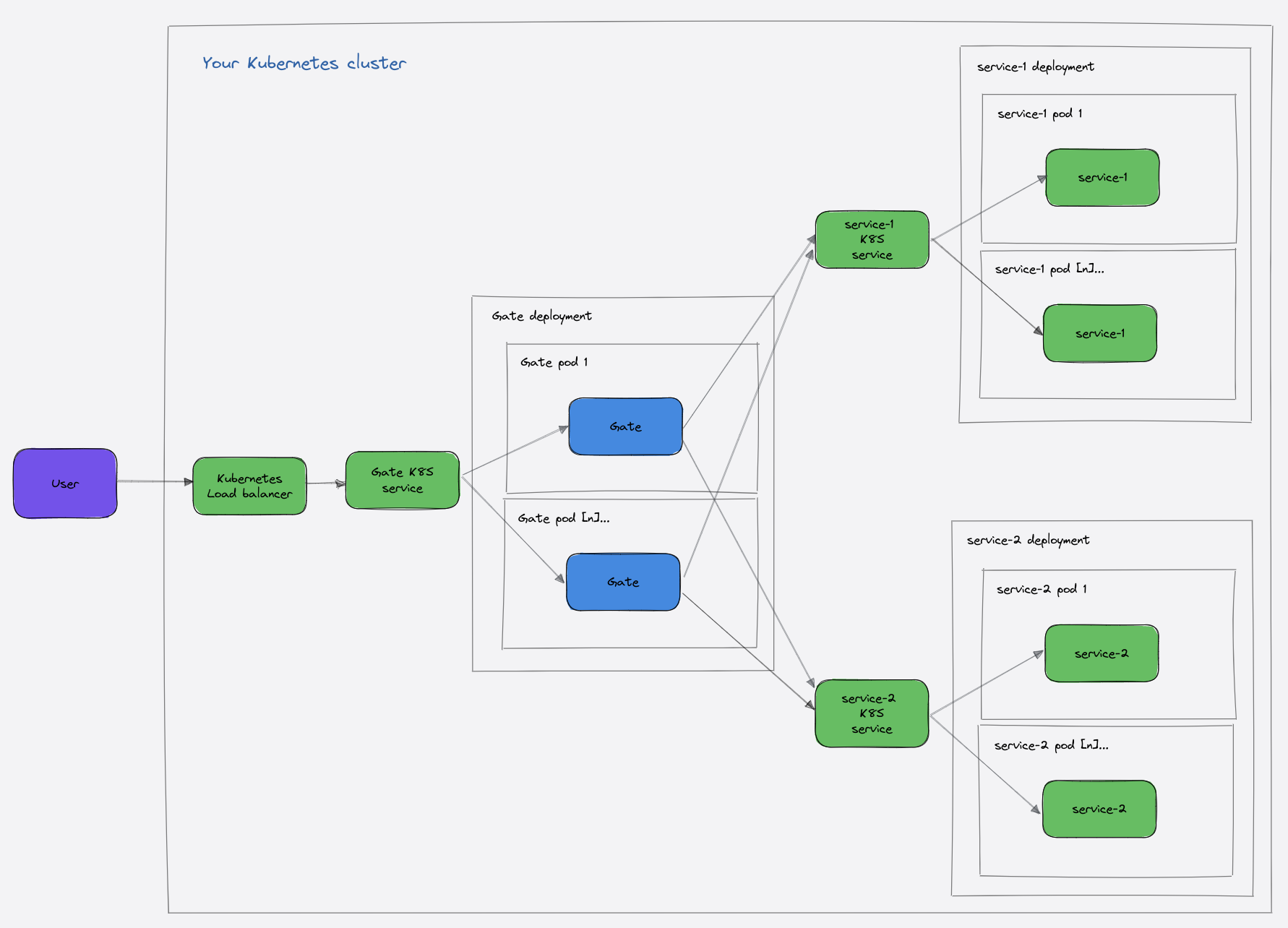

enabled: trueThe Edge Authenticaiton pattern

Continuing with the Kubernetes example, we can deploy Gate between the Kubernetes load balancer and the rest of the backend.

After deploying Gate as a Kubernetes service, the necessary step is to add a LoadBalancer service as follows:

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: gate
      name: gate
    spec:
      externalTrafficPolicy: Local
      ports:
        - port: 8080
          targetPort: 8080
      selector:
        app: gate
      sessionAffinity: None
      type: LoadBalancer

From here on, routes, plugins, and the Gate configuration are defined as usual.

Conclusion

The architecture of your backend is a critical decision that depends on the threat model, the complexity and heterogeneity of the deployment, and the latency requirements.

In this article, we’ve surveyed some of the most common patterns we observe in the industry. Gate can help implement your backend identity posture without dictating a specific architecture.

We’d love to hear any feedback you may have. Please contact us at contact@slashid.dev! Try out Gate with a free account.